Imagine needing to move hundreds of gigabytes between S3 buckets, or between your server and S3, with a single command. Doing it by hand through the AWS console would take hours and invite mistakes. This is where the AWS S3 copy command fits in. It handles file transfers efficiently, at scale, and accurately. Knowing how AWS S3 copy works lets you move files between buckets, transfer data in and out of S3 on a regular schedule, and back data up to or from S3.

Whether you are deploying static sites, shipping application logs, or moving full data sets, getting to grips with the AWS S3 copy command is a must for any AWS-focused DevOps engineer. With options for filtering, metadata, storage classes, and permissions, you get fine-grained control over your data. And with AWS S3 copy recursive (the --recursive flag), the directory structure is preserved with ease.

In this article, you'll learn how the command works, where it is typically used, how to use advanced features such as --recursive and filters, which common mistakes to avoid, and how to build solid automation workflows around it.

Let’s dive in!

What Is AWS S3 Copy and How Does It Work?

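The AWS S3 copy command, aws s3 cp, is part of the AWS CLI. It copies a local file to S3, an S3 object to your local machine, or an object from one S3 location to another. By default it works on a single file or object, but with the --recursive flag it can handle whole directories or prefixes.

The basic syntax is aws s3 cp <source> <destination>, where either side can be a local path or an s3://bucket/key URI. Options let you set ACLs, storage classes, and metadata on the copied objects, which makes the command suitable for both one-off transfers and replication jobs.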
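As a rough illustration of the source/destination form with those options, the sketch below wraps a single-file upload in a shell function. The function name upload_report, the bucket, and the metadata keys are hypothetical; --acl, --storage-class, and --metadata are real aws s3 cp options.

```shell
# Hypothetical helper: upload one local file to S3 with explicit options.
# $1 = local file, $2 = destination S3 URI (e.g. s3://my-bucket/reports/q1.csv)
upload_report() {
  # --acl: bucket-owner-full-control is still accepted when a bucket has ACLs disabled
  # --storage-class: S3 storage class for the new object
  # --metadata: user-defined key=value pairs, stored as x-amz-meta-* headers
  aws s3 cp "$1" "$2" \
    --acl bucket-owner-full-control \
    --storage-class STANDARD_IA \
    --metadata team=analytics,source=nightly-job
}
```

For example, upload_report ./q1.csv s3://my-bucket/reports/q1.csv uploads one file with all three settings applied.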


How Can You Do an AWS S3 Recursive Copy?

Add --recursive to copy a complete folder and everything inside it. It is the easiest method I've found for copying directories to or from S3 while preserving their structure and file hierarchy.

Example:

aws s3 cp ./my-folder s3://my-bucket/my-folder --recursive

This command transfers all files and subfolders under my-folder to the specified bucket location, matching the source directory layout.
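The flag works the same way in the other direction. This minimal sketch restores a prefix from S3 into a local directory; the function name restore_folder and the paths are hypothetical.

```shell
# Hypothetical helper: restore an S3 prefix into a local directory.
# $1 = S3 prefix (e.g. s3://my-bucket/my-folder), $2 = local target directory
restore_folder() {
  # Make sure the target exists before copying into it
  mkdir -p "$2" || return 1
  # --recursive recreates the key hierarchy under the prefix as subdirectories of $2
  aws s3 cp "$1" "$2" --recursive
}
```

Running restore_folder s3://my-bucket/my-folder ./my-folder-restored would bring back the layout uploaded by the command above.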

What Is the Difference Between AWS S3 Copy and AWS S3 Copy Recursive?

On its own, aws s3 cp copies exactly one file or object. With --recursive, it walks the source directory (or S3 prefix) and copies every file in it and in all of its subdirectories. Without --recursive, the source must be a single file or object; pointing the command at a directory makes it fail instead of copying the directory's contents.

Because --recursive lets one command handle the whole tree, and the CLI transfers several files concurrently, it is faster and less error-prone than copying files one by one, especially for large folders during migrations or syncs.
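To make the difference concrete, here is a small hypothetical wrapper, smart_copy, that adds --recursive only when the local source is a directory and falls back to a plain single-file copy otherwise. It assumes a local source and an s3:// destination.

```shell
# Hypothetical wrapper: choose between a single-file and a recursive copy.
# $1 = local path, $2 = S3 destination URI
smart_copy() {
  if [ -d "$1" ]; then
    # Directory: walk the whole tree and upload every file under it
    aws s3 cp "$1" "$2" --recursive
  elif [ -f "$1" ]; then
    # Regular file: a plain single-object copy
    aws s3 cp "$1" "$2"
  else
    echo "smart_copy: $1 is not a file or directory" >&2
    return 1
  fi
}
```

Checking the path first gives a clear error message for a typo in the source instead of a failed transfer.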

How Do You Copy Files Between S3 Buckets?

To copy between buckets, specify both the source and the destination as S3 URIs. With --recursive, you can copy an entire bucket:

Example:

aws s3 cp s3://source-bucket/ s3://dest-bucket/ --recursive

This copies all data from the source to the destination bucket, preserving the path structure.
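For bucket-to-bucket copies it can be worth checking the result. The sketch below copies a prefix and then compares object counts from aws s3 ls --recursive, which prints one line per object. The helper names are hypothetical, prefixes should end with a slash, and the check assumes the destination prefix was empty beforehand; matching counts do not prove the contents are identical.

```shell
# Hypothetical helper: count objects under an S3 prefix.
# `aws s3 ls --recursive` prints one line per object, so counting lines counts objects.
count_objects() {
  aws s3 ls "$1" --recursive | wc -l | tr -d ' '
}

# Hypothetical helper: copy a prefix between buckets, then compare object counts.
# $1 = source prefix, $2 = destination prefix (both s3:// URIs ending in /)
copy_and_verify() {
  aws s3 cp "$1" "$2" --recursive || return 1
  src_count=$(count_objects "$1")
  dest_count=$(count_objects "$2")
  if [ "$src_count" -ne "$dest_count" ]; then
    echo "count mismatch: $src_count source vs $dest_count destination" >&2
    return 1
  fi
  echo "copied $src_count objects"
}
```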

Can You Copy Objects With Metadata and Storage-Class Flags?

Absolutely. You can preserve metadata or change storage classes directly:

aws s3 cp s3://my-bucket/file.txt s3://backup-bucket/file.txt \
--metadata-directive COPY \
--storage-class STANDARD_IA

This copies the object while keeping its metadata intact and writes the new copy in the STANDARD_IA (Standard-Infrequent Access) storage class. The original object in my-bucket is left unchanged.
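The opposite case is replacing metadata. A minimal sketch, assuming a static-site object such as index.html: copying an object onto itself with --metadata-directive REPLACE rewrites its headers and user metadata. With REPLACE, S3 does not carry the old user metadata over, so any keys you want to keep must be passed again. The function name and the header values are illustrative.

```shell
# Hypothetical helper: rewrite an object's headers by copying it onto itself.
# $1 = S3 URI of the object (e.g. s3://my-bucket/index.html)
set_cache_headers() {
  # REPLACE discards the old metadata and uses only what is given here;
  # copying an object onto itself is only allowed when something like this changes
  aws s3 cp "$1" "$1" \
    --metadata-directive REPLACE \
    --content-type text/html \
    --cache-control "max-age=3600" \
    --metadata reviewed=true
}
```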

When Should You Use --recursive vs --exclude/--include?

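Use --recursive when copying multiple files. Add --exclude and --include to filter which files are copied. All files are included by default, and filters are applied in order, with later filters taking precedence over earlier ones. To copy only the .log files in the logs/ folder, exclude everything first and then include the pattern you want:

aws s3 cp . s3://my-bucket/ --recursive \
--exclude "*" --include "logs/*.log"

This skips everything except .log files in logs/. Writing --exclude "*.tmp" --include "logs/*.log" instead would not have that effect: it would copy every file except the *.tmp files. Selective copying like this reduces unnecessary data transfer and costs.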
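Building on that ordering rule (everything is included by default, and later filters override earlier ones), here is a hypothetical helper that uploads only one day's log files from ./logs. It assumes log file names contain a date stamp such as 2024-05-01, a naming convention of our own rather than anything the CLI requires.

```shell
# Hypothetical helper: upload only the .log files for one day from ./logs.
# Exclude everything first, then re-include just the pattern we want.
# $1 = date stamp in the file names (e.g. 2024-05-01), $2 = destination S3 URI
upload_day_logs() {
  aws s3 cp ./logs "$2" --recursive \
    --exclude "*" \
    --include "*$1*.log"
}
```

Adding --dryrun to the command prints which files would be copied without transferring anything, which is a cheap way to test a filter before running it for real.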

What Errors Should You Watch for When Using AWS S3 Copy?

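| Error Type | Likely Cause | Solution |
|---|---|---|
| AccessDenied | Missing IAM or bucket-policy permissions | Grant s3:GetObject on the source, s3:PutObject on the destination, and s3:ListBucket when copying recursively from S3 |
| EntityTooLarge | An object larger than 5 GB sent in a single PUT | Use aws s3 cp, which switches to multipart uploads automatically; when streaming from stdin above 50 GB, also pass --expected-size |
| PermanentRedirect (301 Moved Permanently) | The request went to a different region than the bucket's | Set --region for the destination bucket and --source-region for the source bucket |
| ConnectionTimeout | Network issues | Increase --cli-connect-timeout or --cli-read-timeout, or add retry logic |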
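For ConnectionTimeout and other transient failures, a retry loop with exponential backoff is a common pattern. This is a sketch only: copy_with_retry, MAX_TRIES, and RETRY_DELAY are hypothetical names, and the loop retries every failure, including permanent ones such as AccessDenied, so keep the attempt limit small.

```shell
# Hypothetical retry wrapper: runs `aws s3 cp` with the given arguments
# up to MAX_TRIES times (default 5), doubling the wait after each failure
# (RETRY_DELAY seconds, then 2x, 4x, ...).
copy_with_retry() {
  max_tries=${MAX_TRIES:-5}
  delay=${RETRY_DELAY:-1}
  attempt=1
  while :; do
    if aws s3 cp "$@"; then
      return 0
    fi
    if [ "$attempt" -ge "$max_tries" ]; then
      echo "copy_with_retry: giving up after $attempt attempts" >&2
      return 1
    fi
    echo "copy_with_retry: attempt $attempt failed, retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
}
```

The CLI also retries some errors on its own; the AWS_MAX_ATTEMPTS and AWS_RETRY_MODE environment variables adjust that built-in behavior, so a wrapper like this mainly helps with failures that outlast those retries.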

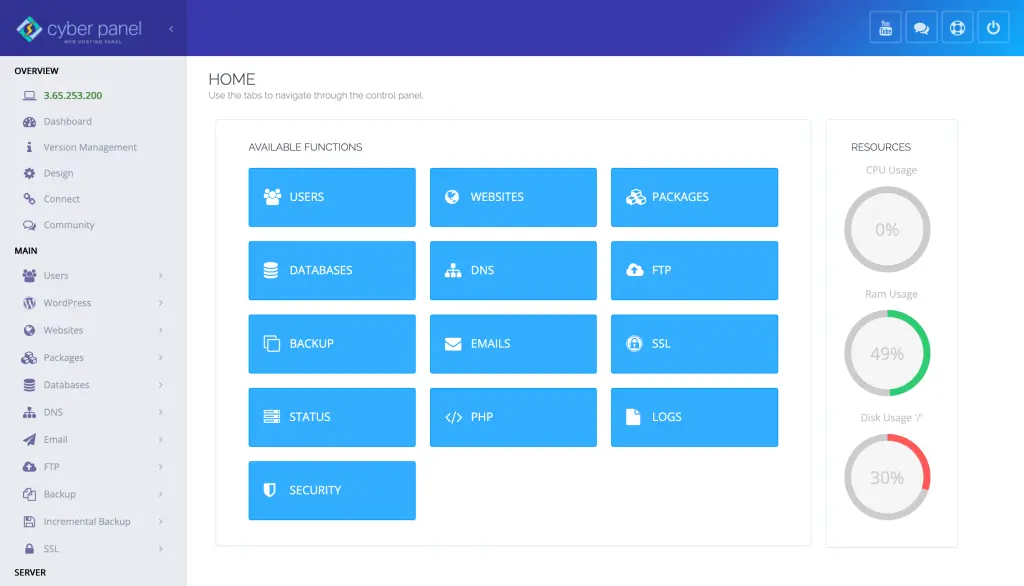

How Can CyberPanel Help With S3 Copy Operations?

CyberPanel is a web hosting control panel, but because it lets you run shell scripts and schedule cron jobs on your servers, it fits naturally into AWS S3 workflows. For example, you can:

- Run aws s3 cp --recursive as a scheduled backup

- Keep copies of SSL certificates or logs in your S3 bucket

- Automatically export website files to S3 for CDN delivery

- Sync server data with versioned S3 buckets for disaster recovery

This enables scalable backup and replication directly into your hosting environment.
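Here is one way the scheduled-backup idea could look. It is a hypothetical script, not a built-in CyberPanel feature: it assumes sites live under /home, the AWS CLI is already configured for the user that runs cron, and the bucket name is a placeholder. Each run writes to a date-stamped prefix so older backups are kept.

```shell
# Hypothetical nightly backup of website files to S3.
# $1 = source directory (default /home), $2 = bucket URI (default is a placeholder)
backup_sites() {
  src=${1:-/home}
  bucket=${2:-s3://my-backup-bucket}
  # Date-stamped prefix so each run lands in its own "folder"
  stamp=$(date +%Y-%m-%d)
  # Skip temp files and store the copies in the cheaper infrequent-access class
  aws s3 cp "$src" "$bucket/sites/$stamp/" --recursive \
    --exclude "*.tmp" \
    --storage-class STANDARD_IA
}
# Example crontab entry (02:30 every night); the script path is hypothetical:
# 30 2 * * * /usr/local/bin/backup-sites.sh >> /var/log/s3-backup.log 2>&1
```

Saved as a script that ends with a backup_sites call, it can be scheduled through CyberPanel's cron jobs or a crontab entry like the one in the final comment.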

People Also Ask

Can I copy between regions?

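Yes. aws s3 cp can copy between buckets in different regions: --source-region sets the source bucket's region and --region sets the destination's. The caller needs read access to the source and write access to the destination, which is normally granted through IAM or bucket policies rather than ACLs.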
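A minimal sketch of a cross-region copy. The function name is hypothetical, and the bucket names and regions in the usage line are placeholders.

```shell
# Hypothetical helper: copy a prefix from a bucket in one region to a bucket in another.
# $1 = source prefix, $2 = destination prefix, $3 = source region, $4 = destination region
copy_cross_region() {
  # --source-region applies to the source bucket; --region applies to the destination
  aws s3 cp "$1" "$2" --recursive \
    --source-region "$3" \
    --region "$4"
}
```

For example: copy_cross_region s3://logs-us/2024/ s3://logs-eu/2024/ us-east-1 eu-west-1. Keep in mind that data moved between regions is billed as inter-region transfer.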

Does aws s3 cp preserve file timestamps?

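No. S3 does not keep a file's original modification time; it records its own LastModified timestamp when the object is written. If you need the original time, store it yourself as user metadata with --metadata.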
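A sketch of storing the timestamp, assuming a metadata key named mtime that holds Unix epoch seconds (both choices are ours, not an S3 convention). The function name is hypothetical.

```shell
# Hypothetical helper: upload a file and record its modification time as user metadata.
# $1 = local file, $2 = destination S3 URI
upload_with_mtime() {
  # GNU stat uses -c %Y; BSD/macOS stat uses -f %m
  mtime=$(stat -c %Y "$1" 2>/dev/null || stat -f %m "$1")
  aws s3 cp "$1" "$2" --metadata "mtime=$mtime"
}
```

To read it back, aws s3api head-object --bucket my-bucket --key path/to/file --query Metadata returns the object's user metadata, including mtime.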

Can I copy versioned objects?

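Not directly. aws s3 cp only copies the current (latest) version of an object. For version-specific copies, use the lower-level aws s3api commands with a version ID, or S3 Batch Operations for large numbers of objects.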
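A minimal sketch using aws s3api copy-object, whose --copy-source accepts a versionId query string. The function name and arguments are hypothetical. CopyObject handles source objects up to 5 GB in one call, and keys with special characters must be URL-encoded in --copy-source.

```shell
# Hypothetical helper: copy one specific version of an object into another bucket.
# $1 = source bucket, $2 = object key, $3 = version ID, $4 = destination bucket
copy_object_version() {
  aws s3api copy-object \
    --copy-source "$1/$2?versionId=$3" \
    --bucket "$4" \
    --key "$2"
}
```

Version IDs for a key can be listed with aws s3api list-object-versions --bucket my-bucket --prefix file.txt.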

Final Thoughts!

Learning AWS S3 copy the right way, using --recursive, filters, and metadata control, lets you transfer your data reliably, at low cost, and securely. Whether you are backing up servers with CyberPanel or building a data pipeline, aws s3 cp is the command you'll keep turning to.

Ready to put your S3 operations on autopilot? Start using aws s3 cp for fast, efficient transfers and backups today!