When working with infrastructure as code, you often need to pack application files, Lambda functions, or configuration directories into a zip or tar archive for deployment. This is where Terraform's archive_file comes to the rescue. It automates packaging directly within your Terraform configuration, so you don't have to run a separate CLI command or write a hook script.

With Terraform archive_file, you can easily bundle code or static files into a compressed archive and hand it to AWS Lambda, an S3 bucket, or another serverless platform. Because the archive is built from your current source files each time Terraform runs, packaging is faster and more reliable, and you are less likely to deploy a stale version of your files.

In this guide, we look at how to get the most out of archive_file: its syntax, common use cases, best practices, and how it fits into your automation pipelines.

What Is archive_file in Terraform and How Do You Use It?

In Terraform, the archive_file data source creates an archive (zip, or tar.gz in recent provider versions) from local files or directories. It is part of the hashicorp/archive provider and lets Terraform package files before passing them to other resources.

It does not create any infrastructure itself, but it is useful for building deployment artifacts. Once packaged, these code archives can be passed to other resources to deploy applications, such as serverless functions.

How Do You Use the archive_file Data Source?

Here’s a simple example of how to leverage the archive_file data source:


```hcl
data "archive_file" "lambda_package" {
  type        = "zip"
  source_dir  = "${path.module}/lambda_code"
  output_path = "${path.module}/lambda_package.zip"
}
```

- type: Specifies the archive format (zip, or tar.gz in recent provider versions).
- source_dir: The folder whose contents will be archived.
- output_path: Where the archive will be written on disk.

You can also archive individual files instead of directories:

```hcl
data "archive_file" "config_file" {
  type        = "zip"
  source_file = "${path.module}/myconfig.json"
  output_path = "${path.module}/myconfig.zip"
}
```
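The provider can also build an archive from an in-memory string instead of a file on disk. This is useful when the file's content is generated by Terraform itself. The following is a minimal sketch that uses the source_content and source_content_filename arguments. The settings map and the file names are hypothetical.

```hcl
# Build a zip containing one generated file, without writing that file to disk first.
data "archive_file" "generated_config" {
  type                    = "zip"
  source_content          = jsonencode({ log_level = "info", retries = 3 }) # hypothetical settings
  source_content_filename = "config.json" # name of the file inside the archive
  output_path             = "${path.module}/generated_config.zip"
}
```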

What Are Some Common Use Cases for archive_file in Terraform?

Common use cases include:

- AWS Lambda Deployments: Package Python or Node.js code into a zip and pass it to aws_lambda_function.

- S3 Uploads: Zip up static website files before uploading to S3.

- Configuration Management: Package configuration files for consistent rollout across servers.

- Helm Charts: Archive a Helm chart and upload it for Kubernetes workloads.

- Container Init Scripts: Bundle Docker init scripts to be shipped in the container.

This versatility is why archive_file is a useful tool in modern DevOps workflows.
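For the S3 use case, a sketch might look like the following. It assumes the AWS provider is configured and that a bucket resource named aws_s3_bucket.site exists elsewhere. The site directory and the object key are illustrative. Setting source_hash from the archive's output_md5 prompts Terraform to re-upload the object whenever the archive contents change.

```hcl
# Package the static site directory (hypothetical path).
data "archive_file" "site" {
  type        = "zip"
  source_dir  = "${path.module}/site"
  output_path = "${path.module}/build/site.zip"
}

# Upload the archive to a bucket assumed to be defined elsewhere as aws_s3_bucket.site.
resource "aws_s3_object" "site_bundle" {
  bucket      = aws_s3_bucket.site.id
  key         = "releases/site.zip"
  source      = data.archive_file.site.output_path
  source_hash = data.archive_file.site.output_md5 # changes when the archive changes
}
```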

How Does archive_file Work as a Data Source?

archive_file is a data source (declared with data "archive_file"), not a resource. It doesn't manage infrastructure. Instead, it produces data that you can pass to other resources: in this case, an archive file on disk plus its attributes.

Important attributes exported by archive_file:

| Attribute | Description |
|---|---|
| output_path | Path (as configured) to the generated archive file |
| output_base64sha256 | Base64-encoded SHA-256 checksum of the archive |
| output_sha | SHA-1 checksum of the archive (for integrity checks) |
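To inspect these values, for example in a plan or a CI log, you can expose them as outputs. This sketch assumes the lambda_package data source from the first example is declared in the same module. The output names are arbitrary.

```hcl
# Expose the archive's location and checksums (assumes data.archive_file.lambda_package exists).
output "lambda_package_path" {
  value = data.archive_file.lambda_package.output_path
}

output "lambda_package_sha256_base64" {
  value = data.archive_file.lambda_package.output_base64sha256
}

output "lambda_package_sha1" {
  value = data.archive_file.lambda_package.output_sha
}
```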

Difference Between archive_file and Terraform File Functions

- archive_file: Packages one or more files, or an entire directory, into a compressed archive. Good for Lambda or S3 uploads.

- file(): Reads the content of a file into a string.

- filebase64(): Reads a file and returns its contents base64-encoded. It is often used for init scripts or other values that must be passed in base64.

Use archive_file when you need to bundle files into a compressed archive. Use file() when you only need the contents of a single text file.
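For comparison, here is a minimal sketch of the two built-in functions. myconfig.json is reused from the earlier example, while init.sh is a hypothetical script.

```hcl
locals {
  # file(): read a single text file as a string (here, parsed as JSON).
  app_config = jsondecode(file("${path.module}/myconfig.json"))

  # filebase64(): read a file's raw bytes and return them base64-encoded.
  init_script_b64 = filebase64("${path.module}/init.sh")
}
```

A value like local.init_script_b64 can then be passed to arguments that expect base64 input, such as user_data_base64 on aws_instance. Neither function produces an archive, which is the gap archive_file fills.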

What Are the Best Practices for Terraform archive_file Usage?

Here are some tips to keep your archive_file usage smooth and predictable:

- Keep Source Files in Modules: Store source files in subdirectories of the module (for example, ${path.module}/lambda_code) so the configuration stays self-contained.

- Use Consistent Output Paths: Write archives to a predictable location inside the module instead of a path outside it, so separate modules don't overwrite each other's files.

- Avoid Committing Archives to Git: To keep history clean, don't check in generated zip files. Let Terraform regenerate them during plan and apply.

- Trigger Updates on File Change: Reference the archive's hash outputs (such as output_base64sha256) so resources that use the archive are updated when its contents change.

- Use Provisioners Sparingly: If you must unpack an archive, do it with a local-exec provisioner or a cloud function, and only when nothing else fits.
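Several of these tips can be combined in a single data source. This is a minimal sketch: the lambda_code directory, the build/ output folder, and the excluded file names are illustrative assumptions. You would also add build/ to .gitignore so generated archives never reach Git. The excludes argument tells archive_file to skip the listed paths, which are relative to source_dir.

```hcl
data "archive_file" "lambda_build" {
  type        = "zip"
  source_dir  = "${path.module}/lambda_code"             # sources kept inside the module
  output_path = "${path.module}/build/lambda_package.zip" # one predictable build folder

  # Leave development-only files out of the artifact (hypothetical file names).
  excludes = [
    "README.md",
    "requirements-dev.txt",
  ]
}
```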

How to Trigger Changes in archive_file When Source Files Change?

Because archive_file is a data source, Terraform reads it during every plan and rebuilds the archive from source_file or source_dir. When any file's contents change, the archive's checksums change too. Any resource that references those checksums then shows a diff.

To trigger recreation, modify, add, or remove a file in source_dir (or edit the file named in source_file).

Example: redeploying an AWS Lambda function when the archive changes:

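```hcl
resource "aws_lambda_function" "my_lambda" {
  # Path to the zip produced by the archive_file data source.
  filename = data.archive_file.lambda_package.output_path

  # Changes whenever the archive contents change, which triggers a redeploy.
  source_code_hash = data.archive_file.lambda_package.output_base64sha256

  # Other required arguments (function_name, role, handler, runtime, ...) go here.
}
```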

This ensures your Lambda is redeployed when the code archive changes.

How to Use archive_file with CI/CD Pipelines?

In continuous integration environments such as GitHub Actions or GitLab CI:

- Install the Terraform CLI and plugins.

- Run terraform init and terraform apply within the pipeline.

- Use archive_file to pack the deployment code on every run.

- Save the zipped output to an artifact repository, or deploy it directly (for example, to Lambda or S3).

Do not store the zip files in Git. Have the pipeline recreate the artifact on every deploy with fresh source.
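To make every pipeline run resolve the same archive provider, you can declare it explicitly so that terraform init installs it. This is a minimal sketch, and the version constraint is only an example. Committing the .terraform.lock.hcl file that terraform init generates pins the exact provider version across runs.

```hcl
terraform {
  required_providers {
    # Downloaded by terraform init in the pipeline.
    archive = {
      source  = "hashicorp/archive"
      version = "~> 2.0" # example constraint; pin to the version you have tested
    }
  }
}
```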

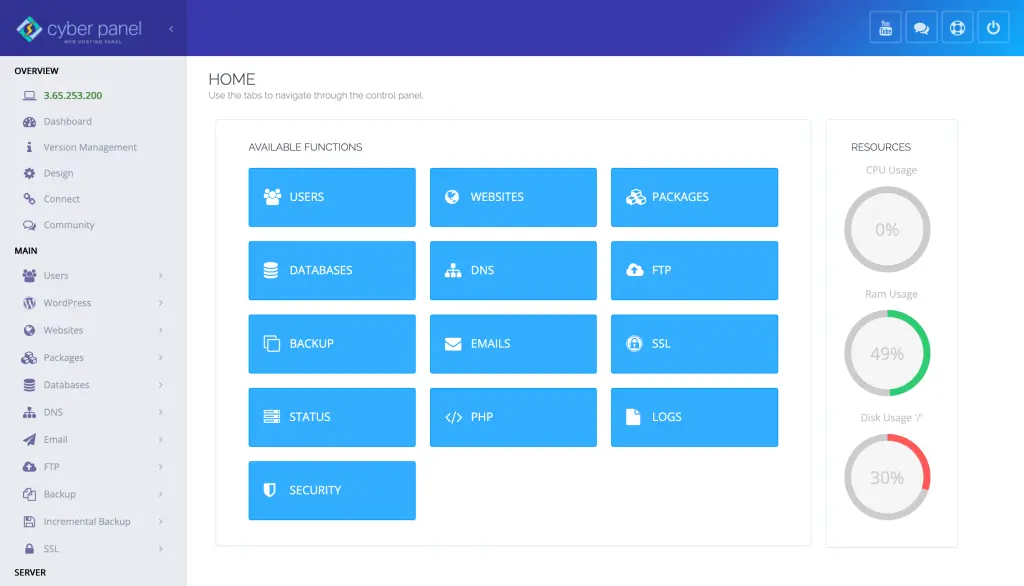

How Does CyberPanel Help in Handling archive_file Outputs?

CyberPanel is a web hosting control panel for managing domains and websites. If you provision infrastructure and package deployment files with Terraform, CyberPanel can help in several ways:

- Serve Web Archives: Deploy website bundles or config files zipped with archive_file to CyberPanel-managed servers.

- Script Automation: Use Terraform to automatically deploy a zipped script or plugin to CyberPanel servers.

- Backup Management: Use Terraform to archive your app configs, then store and manage those archives with CyberPanel's backup tools.

- Custom App Deployments: Extract archives into the web directories managed by CyberPanel.

Together, Terraform handles the backend setup while CyberPanel simplifies day-to-day management of the web app.

FAQs: Terraform archive_file

How do I archive an entire directory with Terraform?

Use the source_dir argument in a data "archive_file" block to zip all files in a directory.

Can I use archive_file to package AWS Lambda functions?

Yes. Use archive_file to package the Lambda code, then pass output_path to the filename argument of aws_lambda_function and output_base64sha256 to its source_code_hash argument.

Which archive formats does archive_file support?

It supports zip and, in recent versions of the hashicorp/archive provider, tar.gz. Set the format with the type argument.

Summary: Let archive_file Terraform Do Your File Packaging for You

Ultimately, archive_file makes deployment easier by automatically packaging your code and assets into a zip (or tar.gz) archive. Whether you are creating a Lambda function, copying files to S3, or bundling configuration, the archive_file data source gives you a consistent, reproducible, and automation-friendly way to package build artifacts.

With its checksum outputs, directory scanning, and tight integration with Terraform workflows, archive_file is an extremely useful tool in modern DevOps pipelines. If you are not already using archive_file, now might be a good time to start: secure, efficient, and clean deployments are only a zip file away.