Kubernetes is an open-source platform for automating the deployment, scaling, and operation of containerized applications across clusters of machines. It gives development and IT teams resilience and efficiency. So what does Kubernetes do? Put simply, Kubernetes gives you an abstraction layer over container management, letting you scale applications across clusters while it handles tasks such as load balancing, health monitoring, and rolling updates.

You have likely seen the term K8s, a short form of Kubernetes. The “8” stands for the eight letters between the “K” and the “s” in the word, so it is simply an abbreviation. If you are still asking yourself what a Kubernetes cluster is, it is a group of nodes that together run containerized applications, distribute workloads, and keep those applications highly available.

In this article, we explain Kubernetes in full: what its components are, what it is used for, what the name means, and what it looks like in real-life examples.

What is Kubernetes used for?

Kubernetes offers several core services that matter in modern cloud-based and microservices applications. The sections below explain what Kubernetes is used for and why it has become an essential tool in the DevOps process:

1. Automatic Deployment and Scaling

Kubernetes automates the deployment of containerized applications. Whether you run a single application or a large microservices architecture, Kubernetes schedules and monitors your workloads and scales your applications as needed.

For example, you can specify how many replicas of an application should be running, and Kubernetes will make sure this desired state is maintained. If a node crashes or becomes unavailable, Kubernetes redeploys the affected containers on another node, keeping downtime to a minimum. This is known as the self-healing feature of Kubernetes.

2. Service Discovery and Load Balancing

Kubernetes gives every pod its own IP address and can expose a group of pods under a single stable IP address and DNS name through a Service. It then automatically distributes incoming traffic across the instances of the same application, spreading the load so that no single instance is overwhelmed, which improves reliability.

For instance, if you have several pods running an NGINX web server, Kubernetes spreads incoming requests across the healthy pods instead of sending them all to one.
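As a rough illustration, a Service that fronts a set of NGINX pods could be declared as in the sketch below. The name `nginx-service` and the `app: nginx` label are assumptions made for this example; the selector has to match whatever labels your pods actually carry.

```yaml
# Sketch only: the Service name and the pod label are illustrative assumptions.
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: ClusterIP        # reachable inside the cluster only
  selector:
    app: nginx           # traffic goes to ready pods carrying this label
  ports:
    - protocol: TCP
      port: 80           # port exposed by the Service
      targetPort: 80     # port the NGINX containers listen on
```

Other pods in the same namespace could then reach the web servers through the DNS name `nginx-service`, without knowing any individual pod IP.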

3. Rolling Updates and Rollbacks

Kubernetes makes application updates smooth. A new version can be rolled out gradually, replacing old pods with new ones without downtime. If something goes wrong, the rollout can be rolled back to the previous working version. This is very handy in production environments where uptime is paramount.
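A hedged sketch of how a rolling update can be configured on a Deployment follows. The name `web`, the replica count, the image tag, and the surge settings are illustrative assumptions, not recommendations.

```yaml
# Sketch only: names, image tag, and numbers are illustrative assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 4
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most one extra pod during the update
      maxUnavailable: 0    # never drop below the desired replica count
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27      # changing this tag triggers a new rollout
          ports:
            - containerPort: 80
          readinessProbe:        # new pods receive traffic only once ready
            httpGet:
              path: /
              port: 80
```

With a manifest like this, `kubectl rollout status deployment/web` follows the progress of an update and `kubectl rollout undo deployment/web` returns to the previous revision.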

4. Storage Orchestration

Kubernetes can automatically provision storage from systems such as AWS EBS, Azure Disk, or Google Cloud Persistent Disk. It hides the underlying storage infrastructure from applications while giving them persistent storage.

For example, a database running on a Kubernetes cluster can use persistent volumes so that its data survives even if its pods are rescheduled to different nodes.
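To make this concrete, an application typically requests storage through a PersistentVolumeClaim. The sketch below assumes a claim named `db-data` and a size of 10Gi, and it relies on the cluster having a default StorageClass that provisions the volume.

```yaml
# Sketch only: the claim name and size are illustrative assumptions.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-data
spec:
  accessModes:
    - ReadWriteOnce      # mounted read-write by a single node at a time
  resources:
    requests:
      storage: 10Gi      # requested capacity
  # storageClassName is omitted, so the cluster's default StorageClass is used
```

A database pod would then reference the claim in its `volumes` section through `persistentVolumeClaim.claimName: db-data` and mount it at its data directory.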

5. Running Containers in Multiple Environments

Kubernetes fits well into a multi-cloud or hybrid-cloud strategy. It can run on almost any infrastructure: on-premises, in a public cloud like AWS, Google Cloud, or Azure, or across multiple clouds. This is one of the reasons why Kubernetes is so popular today.

Components of Kubernetes Explained

Understanding what Kubernetes is used for requires an understanding of its structure. A Kubernetes cluster consists of a control plane and one or more worker nodes, each with its own responsibilities.

1. Control Plane

The control plane manages the entire Kubernetes cluster. It schedules workloads, monitors the health of applications, and tracks the state of the cluster. The main parts of the control plane include:

API Server: The API server is the front end of Kubernetes. All administrative tasks are performed through REST API calls to it.

Scheduler: Assigns pods to worker nodes based on resource availability.

Controller Manager: Runs the controllers that continuously drive the actual state of the cluster toward the desired state.

etcd: A distributed, consistent key-value store that holds all configuration and state data of the cluster.

2. Worker Nodes

Actual workloads, or containers, run on worker nodes. Each node contains the following:

Kubelet: The agent that runs on each node and communicates with the control plane to ensure that the right containers are running on that node.

Kube-proxy: Handles networking on each node by maintaining the network rules that let traffic reach pods and Services.

Container Runtime: The software that runs containers, for example containerd, CRI-O, or Docker Engine (through the cri-dockerd adapter).

Real-World Example: Running an NGINX Application

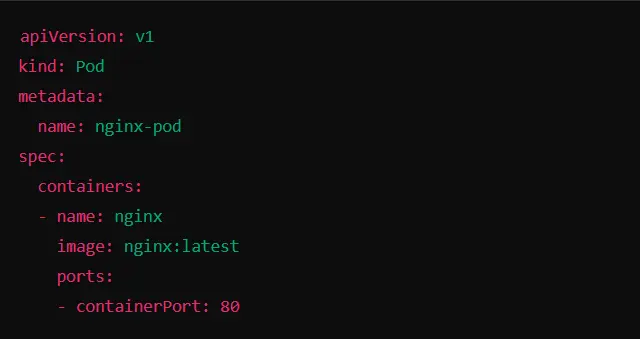

Next is a real-world example of Kubernetes in action. Suppose you want to deploy a simple NGINX web server inside a Kubernetes cluster.

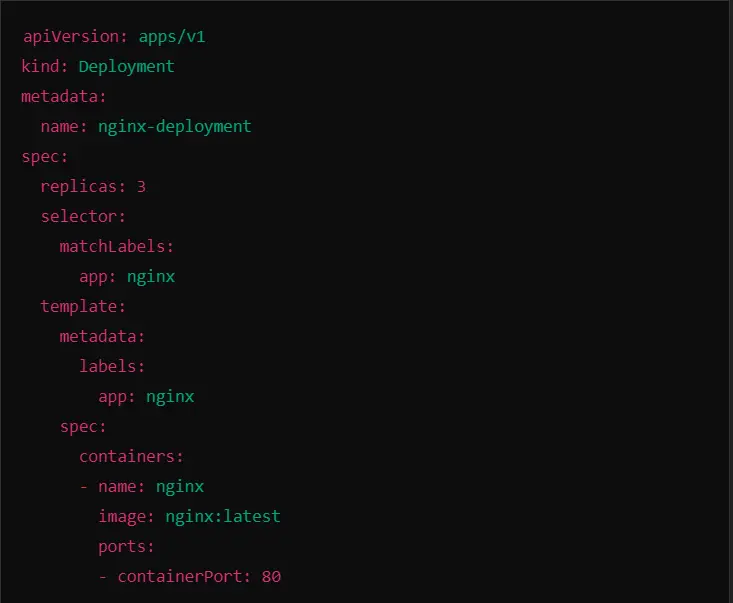

Here is the configuration for an NGINX pod:

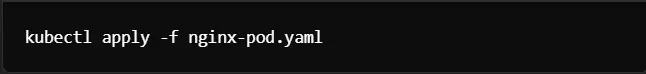

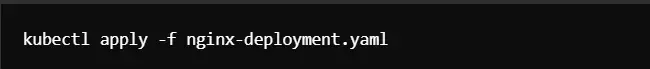
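In text form, a minimal pod manifest of this kind could look like the sketch below. The pod name `nginx-pod`, the `app: nginx` label, the image tag, and the file name `nginx-pod.yaml` are assumptions chosen for illustration.

```yaml
# Sketch only: save as nginx-pod.yaml (an assumed file name).
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.27      # illustrative image tag
      ports:
        - containerPort: 80  # port NGINX listens on inside the container
```

Under these assumptions, the manifest would be submitted with `kubectl apply -f nginx-pod.yaml`.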

To apply this configuration in a Kubernetes cluster, you would run:

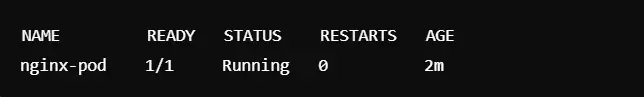

After deployment, you can verify that the pod is running with `kubectl get pods`.

It’s a simple example, but it shows how Kubernetes automates application deployment and management. If the container in the pod above fails, Kubernetes restarts it automatically. This is the self-healing ability mentioned earlier.

What is a Kubernetes Cluster?

A Kubernetes cluster consists of machines, referred to as nodes, that run applications packaged into containers. One machine or a set of machines acts as the control plane (historically called the master), while the others are worker nodes. The control plane components run on the control plane nodes, while application containers run on the worker nodes.

How a Kubernetes Cluster Works:

Control Plane: It manages the orchestration of workloads.

Worker Nodes: Run applications and handle the network traffic to and from them.

What is Kubernetes? Meaning and Use Cases

Now let’s go deeper into the question “What is Kubernetes used for?” The meaning of Kubernetes makes sense in the context of its uses. The name “Kubernetes” comes from the Greek word for pilot or helmsman, which is a fair description of what the tool does: steer complex applications toward peak performance and dependability.

Here are a few use cases in which Kubernetes shines:

1. Microservices Architecture

With microservices, every service is developed, deployed, and scaled independently. Kubernetes makes these services easy to manage because developers can package each one in a container and deploy it to a cluster.

2. Continuous Integration/Continuous Deployment (CI/CD)

For teams working with CI/CD, Kubernetes is a good fit. It supports automated testing and deployments across multiple environments, so updates can be rolled out with minimal downtime.

3. Big Data and Machine Learning

Kubernetes is increasingly being used for big data processing and machine learning workloads. Distributed computing frameworks like Apache Spark can be deployed on Kubernetes with flexible scaling of compute resources.

4. Hybrid and Multi-Cloud Environments

A key strength of Kubernetes is its flexibility in hybrid and multi-cloud environments. This helps organizations that want to avoid being locked into a single vendor or that want to balance workloads between on-premises data centers and the public cloud.

Kubernetes in Action: Scaling an Application

Another nice feature of Kubernetes is that it can scale applications automatically. Here’s an example of scaling the NGINX pod we deployed earlier.

First, you define a deployment:
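A minimal Deployment manifest for this step might look like the following sketch. The file name `nginx-deployment.yaml`, the resource name, the labels, and the image tag are illustrative assumptions rather than values taken from the original configuration.

```yaml
# Sketch only: save as nginx-deployment.yaml (an assumed file name).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3                # desired number of NGINX pods
  selector:
    matchLabels:
      app: nginx             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.27  # illustrative image tag
          ports:
            - containerPort: 80
```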

This will create three replicas of the NGINX pod. To apply this deployment:

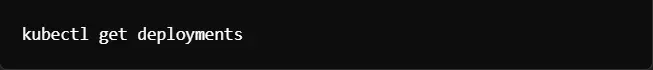

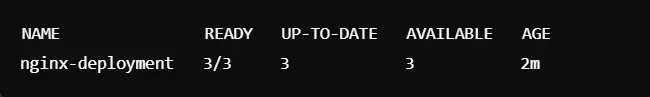

You can now check the status of the deployment with `kubectl get deployments`.

Kubernetes keeps three replicas of NGINX running, so whenever one pod fails, it automatically creates another to maintain the desired number of replicas.
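The Deployment keeps a fixed number of replicas. To let the replica count follow load, Kubernetes offers the HorizontalPodAutoscaler. The sketch below rests on several assumptions: the Deployment is named `nginx-deployment`, a metrics source such as metrics-server is installed in the cluster, and the NGINX container declares a CPU request, since utilization is calculated relative to requests. The thresholds are illustrative.

```yaml
# Sketch only: target name, replica bounds, and threshold are assumptions.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment   # the Deployment whose replicas are adjusted
  minReplicas: 3             # never scale below three pods
  maxReplicas: 10            # upper bound on the number of pods
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # add pods when average CPU exceeds 70% of requests
```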

Role of CyberPanel in Kubernetes Management

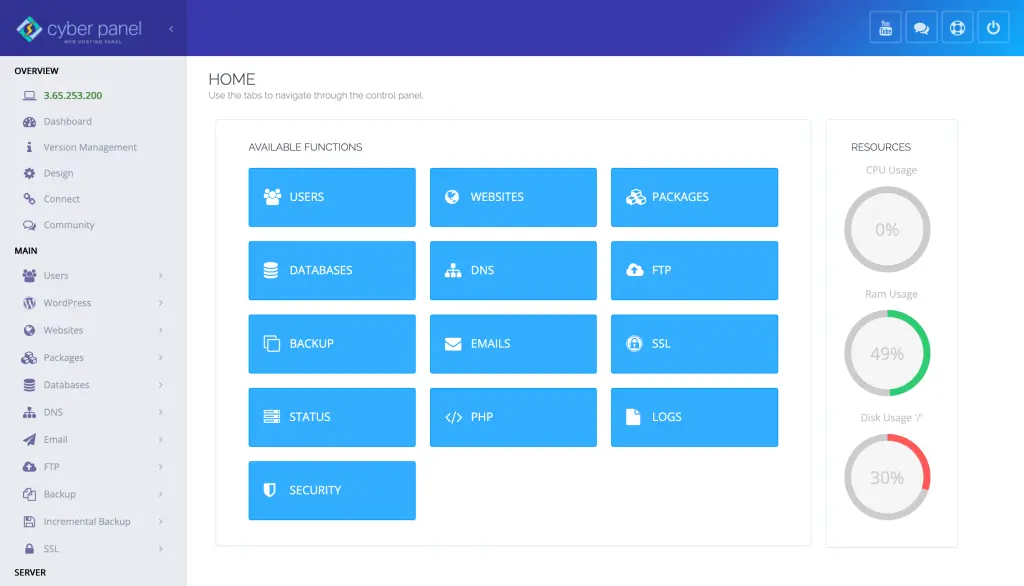

CyberPanel is a robust, open-source web hosting control panel. CyberPanel does not natively manage Kubernetes; however, it plays a key role in the hosting environment that often runs alongside Kubernetes-managed applications.

How CyberPanel Complements Kubernetes:

Easy Deployment: CyberPanel simplifies application deployment and resource management for developers. Kubernetes manages the orchestration of containers, while CyberPanel manages the server and its resources as a one-stop hosting solution.

Resource Management: If you run your applications in containers on a Kubernetes cluster, CyberPanel can still handle your web hosting features such as DNS management, SSL certificates, and email configuration alongside the Kubernetes-managed environment.

Managing Server Resources: CyberPanel’s GUI makes resource management on servers easy. You can use it to optimize the performance of a node in a Kubernetes cluster and get the best out of your infrastructure.

Automation: Like Kubernetes, CyberPanel automates many server administration tasks, including backups and updates. Using both together can minimize manual intervention and improve efficiency in your hosting and development environments.

Compatibility with Containers: While CyberPanel is essentially a web hosting panel, it works alongside container technologies such as Docker deployed in a Kubernetes cluster. This lets developers manage their applications through both CyberPanel and Kubernetes.

Real-world example: Deploying with Kubernetes and CyberPanel

Suppose you’re running a web application in a Kubernetes cluster. CyberPanel makes it simple to put your website on the hosting platform, while Kubernetes handles the intrinsic complexity of running an application spread across multiple containers and ensures efficient workload distribution.

For example, you can deploy a website using CyberPanel while Kubernetes ensures the scalability and management of containers. This synergy between CyberPanel and Kubernetes helps the developer manage everything from the infrastructure to the application layer.

Frequently Asked Questions For “What Is Kubernetes Used For”

1. What is Kubernetes?

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It ensures applications run in the same way whether in the cloud, on-premises, or in a hybrid environment.

2. What is a Kubernetes cluster?

A Kubernetes cluster is a collection of machines, known as nodes, that work together to run containerized applications. A cluster contains at least one control plane node (historically called the master) and one or more worker nodes that execute applications.

3. What is K8s?

K8s is a short form of Kubernetes. The number 8 is used because there are eight letters between the letter “K” and the letter “s” in the word Kubernetes. Many developers write “K8s” when referring to Kubernetes.

4. How does Kubernetes manage containerized applications?

It deploys applications across a cluster of machines according to the rules you define. It automates many tasks, such as load balancing, resource allocation, and health checks, which together provide high availability and recovery when a fault occurs.

5. What is Kubernetes used for?

It is widely used because it makes containerized applications easy to scale automatically and keeps them highly available. Kubernetes abstracts away the complexity of managing containers on clusters, so developers and IT teams can maintain more robust, scalable applications.

6. Is Kubernetes only for large-scale applications?

While Kubernetes shines in large environments, it can be used for small projects as well. Its flexibility makes it suitable for applications of any size, whether at a small startup or a huge enterprise.

7. What are the Kubernetes components?

The control plane and the worker nodes are the two major parts of Kubernetes. The control plane consists of the API server, the scheduler, the controller manager, and etcd, while each worker node runs the kubelet, kube-proxy, and a container runtime. Together, these manage applications and keep them running smoothly.

Conclusion

Kubernetes and CyberPanel: A New Road to Application Management

To sum up, Kubernetes changes the story when it comes to managing containerized applications. Such applications can be automatically deployed, scaled, and recovered.

Understanding what Kubernetes is used for further illustrates how it brings efficiency to everything from microservices to multi-cloud environments.

CyberPanel brings streamlined, intuitive server management that pairs well with Kubernetes. Its clean interface makes web hosting easy and hassle-free and complements the powerful orchestration in Kubernetes.

Now start your journey with CyberPanel and bring your Kubernetes and hosting environments together!